Understanding Analysis of Variance

What is ANOVA, and how do you perform it in SPSS?

Definition and characteristics of ANOVA

ANOVA (analysis of variance) is a statistical method that partitions the total variation in a data set into components attributable to different sources, so that each source can be tested. One-way ANOVA is typically used to compare three or more groups. It examines how a continuous dependent variable, measured on an interval or ratio scale, varies across the levels of one or more categorical independent variables (factors). ANOVA is a parametric test and rests on several assumptions: the dependent variable is measured at the interval level, the responses at each factor level are normally distributed in the population, these distributions have the same variance, and the observations are independent. The same assumptions apply to one-way, two-way, and three-way designs: each sample should be drawn from a normally distributed population, and samples are independent when no sample depends on any other. One-way ANOVA, for example, estimates the variation within groups and the variation between group means, and uses their ratio to decide whether the means differ. In short, ANOVA tests whether there are statistically significant differences between the means of three or more unrelated groups. For students exploring complex statistical methodologies, seeking statistics dissertation help can provide valuable insights and guidance in navigating such detailed analyses.

A significant ANOVA result indicates that at least two of the population means are unequal. The decision rests on the null hypothesis that all group means are equal: the p-value is the probability, assuming the null hypothesis is true, of observing a result at least as large as the one obtained in the experiment. The technique is called "analysis of variance" rather than "analysis of means" because it compares group means by comparing sources of variance. Interpreting the key results of a one-way ANOVA involves examining and comparing the group means and checking how well the model fits the data. A p-value greater than 0.05 is not the probability that the null hypothesis is true; it means the data do not provide sufficient evidence to reject the null hypothesis at the 5% level. In the ANOVA table, the Sum of Squares column shows the variation between groups, within groups, and in total, and each sum of squares is divided by its degrees of freedom to give a mean square. For example, a p-value of 0.03 means that, if the null hypothesis were true, there would be a 3% chance of obtaining an F value at least as large as the one observed. Calculating the degrees of freedom (k − 1 between groups and N − k within groups, for k groups and N observations in total) is essential for determining statistical significance.
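As a rough illustration of how the quantities in a one-way ANOVA table fit together, the sketch below computes them in plain Python. It assumes independent groups and uses made-up numbers; the function name `one_way_anova` is illustrative and is not an SPSS or library API. It stops at the F statistic: the p-value would come from the F distribution with (k − 1, N − k) degrees of freedom, for example via `scipy.stats.f_oneway` or the "Sig." column of the SPSS output.

```python
def one_way_anova(groups):
    """Return (ss_between, ss_within, df_between, df_within, f) for independent groups."""
    values = [x for g in groups for x in g]
    n_total, k = len(values), len(groups)
    grand_mean = sum(values) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Between groups: squared distance of each group mean from the grand mean, weighted by group size
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within groups: squared distance of every observation from its own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    # F is the ratio of the between-groups and within-groups mean squares
    f = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, df_between, df_within, f
```

For the groups [4, 5, 6], [6, 7, 8] and [8, 9, 10], the means are 5, 7 and 9, so SS between = 24, SS within = 6, the degrees of freedom are 2 and 6, and F = (24 / 2) / (6 / 6) = 12.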

Two-way ANOVA estimates how the mean of a quantitative variable changes according to the levels of two categorical variables. "ANOVA test is a statistical test used to determine the effect of two nominal predictor variables on a continuous outcome variable". A two-way ANOVA identifies differences between groups through main effects: the main effect of each independent variable is its effect on the dependent variable while ignoring the other independent variable. When a factor has more than two levels, post hoc testing is needed to determine which levels differ and to interpret the result. The two-way ANOVA technique suits data sets in which each factor is measured separately and the measurements are not repeated on the same subjects. Its primary purpose is to determine whether there is an interaction between the two factors. ANOVA has been applied successfully in economics, biology, education, and other disciplines. In agriculture, for example, it is used to compare crop production across the types of fertilizer used.
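For a balanced design without repeated measures, the main effects and the interaction can be separated with the sums of squares below. This is a sketch under stated assumptions: every cell has the same number of observations, both factors are fixed, and the data and the function name `two_way_anova_balanced` are hypothetical. Unbalanced designs need a different decomposition, which statistical software such as SPSS handles for you.

```python
def two_way_anova_balanced(cells):
    """F statistics for a balanced two-way ANOVA without repeated measures.

    cells[i][j] holds the observations for level i of factor A and level j of
    factor B; every cell must contain the same number of observations.
    """
    def mean(xs):
        return sum(xs) / len(xs)

    a, b, n = len(cells), len(cells[0]), len(cells[0][0])
    grand = mean([x for row in cells for cell in row for x in cell])
    cell_means = [[mean(cell) for cell in row] for row in cells]
    a_means = [mean([x for cell in row for x in cell]) for row in cells]
    b_means = [mean([x for row in cells for x in row[j]]) for j in range(b)]

    # Main effects: spread of the marginal means around the grand mean
    ss_a = b * n * sum((m - grand) ** 2 for m in a_means)
    ss_b = a * n * sum((m - grand) ** 2 for m in b_means)
    # Interaction: what the cell means show beyond the two main effects
    ss_ab = n * sum((cell_means[i][j] - a_means[i] - b_means[j] + grand) ** 2
                    for i in range(a) for j in range(b))
    # Error: variation of observations around their own cell mean
    ss_error = sum((x - cell_means[i][j]) ** 2
                   for i in range(a) for j in range(b) for x in cells[i][j])
    ms_error = ss_error / (a * b * (n - 1))
    return {
        "F_A": ss_a / (a - 1) / ms_error,
        "F_B": ss_b / (b - 1) / ms_error,
        "F_AB": ss_ab / ((a - 1) * (b - 1)) / ms_error,
    }
```

Treating factor A as fertilizer type and factor B as a second hypothetical treatment, the test data shift the mean by 4 units across A and 2 units across B with no interaction, giving F_A = 16, F_B = 4 and F_AB = 0 on (1, 4) degrees of freedom.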

ANOVA in SPSS

In SPSS, ANOVA is used to examine differences in the mean values of a dependent variable that are associated with controlled independent variables, after taking into account the influence of uncontrolled independent variables. The independent variables in an ANOVA must be categorical; in SPSS, these categorical independent variables are called factors. SPSS analysis is often an integral part of a dissertation, alongside aligning theoretical frameworks, gathering articles, and synthesizing gaps in the literature, and an SPSS-based ANOVA is a common component of such academic writing. For scholarly work, all sources should be cited and the analysis carried out authentically, which helps in addressing committee feedback and reducing revisions. To run the analysis, choose Analyze > Compare Means > One-Way ANOVA from the menu.

SPSS identifies how the dependent variable depends on the factor. Suppose X is a categorical independent variable with c categories and Y is the dependent variable. The sample size in each category of X is denoted n, so the total sample size is N = n × c. The next step in an SPSS ANOVA decomposes the total variation in Y into the variation between the category means of X (SS between) and the variation within the categories (SS within), so that SS total = SS between + SS within, and then examines the differences among the means. The effect of X on Y is measured by the relative magnitude of these components; its strength is commonly summarized as eta squared, η² = SS between / SS total.
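The decomposition SS total = SS between + SS within and the resulting η² can be checked numerically. In the sketch below, the data are hypothetical, with c = 3 categories and n = 3 cases each (so N = 9), and the function name is illustrative; η² is the share of the total variation in Y that is explained by X.

```python
def eta_squared(groups):
    """Split the total variation in Y by the categories of X.

    Returns (ss_total, ss_between, ss_within, eta2) where eta2 = ss_between / ss_total.
    """
    values = [y for g in groups for y in g]
    grand_mean = sum(values) / len(values)
    means = [sum(g) / len(g) for g in groups]
    ss_total = sum((y - grand_mean) ** 2 for y in values)
    # Variation of the category means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of observations around their own category mean
    ss_within = sum((y - m) ** 2 for g, m in zip(groups, means) for y in g)
    return ss_total, ss_between, ss_within, ss_between / ss_total
```

With groups [4, 5, 6], [6, 7, 8] and [8, 9, 10], SS total = 30 splits into 24 between and 6 within, so η² = 24 / 30 = 0.8.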

How to Use the Two-Sample t-Test, and What Are the Benefits of SPSS in Conducting It?

Using two-sample t-test

Two-sample t-tests

Two-sample t-tests are also known as independent-samples t-tests. The method is used to determine whether the unknown population means of two groups are equal. A two-sample t-test involves defining a null and an alternative hypothesis and choosing a significance level. Finding the degrees of freedom is essential in conducting the test, and computing the test statistic and p-value shows whether the two population means can be considered equal. A common application is testing whether a new process or treatment is superior to the current one. When the alternative hypothesis allows a difference in either direction, the test is termed two-tailed. For matched pairs, Student's paired t-test determines whether the null hypothesis is rejected or retained. The p-value is the probability, if the null hypothesis is true, that the difference between the sample means would be at least as large as the one observed. A 1-sample sign test for paired data checks whether the median of the paired differences is zero, that is, whether the differences between the two samples could be due to random chance. Unlike the independent-samples t-test, paired data involve a dependency between the two groups, and this dependency must be taken into account.
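The steps of a two-sample t-test can be made concrete with Student's pooled-variance version, which assumes the two populations share a common variance. This is a minimal sketch with illustrative data; `pooled_t_test` is a hypothetical helper, and the p-value (not computed here) would come from the t distribution with n1 + n2 − 2 degrees of freedom, for instance via `scipy.stats.ttest_ind` with its default `equal_var=True`.

```python
import math
from statistics import mean, variance


def pooled_t_test(x, y):
    """Student's two-sample t statistic assuming equal population variances.

    Returns (t, df).
    """
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    # Pooled variance: the two sample variances weighted by their degrees of freedom
    sp2 = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / df
    # Standard error of the difference between the two sample means
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (mean(x) - mean(y)) / se, df
```

For [5, 6, 7, 8, 9] versus [3, 4, 5, 6, 7], both sample variances are 2.5, the standard error is 1, and t = 2 on 8 degrees of freedom.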

Hypothesis test

Statistical testing includes hypothesis testing, which is an important part of conducting meaningful surveys and obtaining valid results. Hypothesis tests examine differences and similarities across several groups. They can be conducted with a t-test, which compares means, or with Mood's median test, which compares the medians of two or more populations. A typical example of hypothesis testing is an experiment on the effectiveness of a particular drug.

Paired t-test

Paired t-tests are used when two measurements are taken on the same subject; the test examines whether the mean of the differences is zero. The two measurements are often separated by time: for example, cholesterol levels could be recorded for each subject in 2010 and again in 2020. The paired t-test is also used for before-and-after measurements in a group of people. If the differences are not normally distributed, a nonparametric test, which does not assume normality, can be used instead.
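A paired t-test reduces to a one-sample test on the within-subject differences. The sketch below assumes the two lists are aligned subject by subject; the cholesterol values are invented for illustration and `paired_t_test` is a hypothetical name. `scipy.stats.ttest_rel` performs the same test and also returns a p-value.

```python
import math
from statistics import mean, stdev


def paired_t_test(before, after):
    """Paired t statistic for H0: the mean within-subject difference is zero.

    Returns (t, df).
    """
    if len(before) != len(after):
        raise ValueError("each subject needs both measurements")
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    # One-sample t statistic computed on the differences
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

With 2010 values [200, 210, 190, 220, 205] and 2020 values [205, 212, 196, 221, 211], the differences are 5, 2, 6, 1 and 6, with a mean of 4 and a standard deviation of √5.5, so t ≈ 3.81 on 4 degrees of freedom.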

Factor analysis

SPSS consulting services are often sought for factor analysis in statistics dissertations, and SPSS help is also available in several online community forums. Here, an expert team is ready to address any problems raised, and factor analysis is carried out by performing the relevant tests. Factor analysis reduces the complexity of a data set by grouping variables into a smaller number of underlying factors. In SPSS it starts from the Analyze menu: click Analyze > Dimension Reduction > Factor (labelled Data Reduction in older versions). SPSS can be used for factor analysis as well as for two-sample tests. Initially, SPSS extracts as many factors as there are variables, and a cut-off eigenvalue of 1 is generally used to decide how many factors to retain. Constructs such as depression or IQ cannot be measured directly, which is why factor analysis is used to infer them from observed variables. It uncovers the latent structure of a set of variables by examining the attribute space of a large number of variables, and it is an interdependence procedure, meaning that no variable is treated as dependent. In simple terms, factor analysis condenses a large set of variables into a smaller set that is more manageable and more understandable.
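The eigenvalue-greater-than-1 rule can be illustrated on a small correlation matrix. The sketch below assumes a symmetric correlation matrix and finds its eigenvalues with the classical Jacobi rotation method in plain Python; the matrix and the function names are hypothetical, and SPSS performs the extraction internally.

```python
import math


def jacobi_eigenvalues(matrix, tol=1e-18, max_sweeps=50):
    """Eigenvalues of a symmetric matrix, largest first, via cyclic Jacobi rotations."""
    a = [list(map(float, row)) for row in matrix]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle that makes the (p, q) entry zero
                theta = 0.5 * math.atan2(2 * a[p][q], a[q][q] - a[p][p])
                c, s = math.cos(theta), math.sin(theta)
                # A <- A J (update columns p and q)
                for i in range(n):
                    aip, aiq = a[i][p], a[i][q]
                    a[i][p], a[i][q] = c * aip - s * aiq, s * aip + c * aiq
                # A <- J^T A (update rows p and q)
                for j in range(n):
                    apj, aqj = a[p][j], a[q][j]
                    a[p][j], a[q][j] = c * apj - s * aqj, s * apj + c * aqj
    return sorted((a[i][i] for i in range(n)), reverse=True)


def kaiser_factor_count(corr):
    """Number of factors whose eigenvalue is greater than 1 (Kaiser criterion)."""
    return sum(1 for ev in jacobi_eigenvalues(corr) if ev > 1)
```

Three variables that all correlate at 0.6 give eigenvalues 2.2, 0.4 and 0.4, so the rule retains a single factor.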

Benefits of SPSS in conducting a Two-Sample t-Test

The independent t-test tests the null hypothesis that there is no significant difference between the means of the two groups. T-tests are commonly used to assess statistical differences between the means of two groups; for example, they can compare change scores under two types of intervention, where the population variances of the two groups may be equal or unequal. SPSS reports the p-value for these tests in a column labelled "Sig.", short for significance. SPSS can import data from many types of file and use them to generate reports. It supports descriptive statistics as well as complex statistical analyses, provided the assumptions are met: an appropriate scale of measurement, random sampling, an adequate sample size, normally distributed data, and homogeneity of variance (similar variances or standard deviations in the two groups). The estimated standard error is an aggregate measure of the variation in both groups of data. SPSS also offers a command syntax that reads much like English, which makes analyses easier to access and repeat. One limitation is that two-sample t-tests perform less well with small samples and better with large samples.
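When equal variances cannot be assumed, the standard error and degrees of freedom change. The SPSS Independent-Samples T Test output reports Levene's test together with an "Equal variances assumed" row and an "Equal variances not assumed" row; the second row corresponds to Welch's approach, sketched below with illustrative data and a hypothetical function name.

```python
import math
from statistics import mean, variance


def welch_t_test(x, y):
    """Two-sample t statistic without assuming equal variances (Welch).

    Returns (t, df), where df is the Welch-Satterthwaite approximation.
    """
    # Squared standard error contributed by each group
    v1, v2 = variance(x) / len(x), variance(y) / len(y)
    t = (mean(x) - mean(y)) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (len(x) - 1) + v2 ** 2 / (len(y) - 1))
    return t, df
```

With equal sample sizes and equal sample variances, the Welch result coincides with the pooled test (t = 2 on 8 degrees of freedom for [5, 6, 7, 8, 9] versus [3, 4, 5, 6, 7]); with unequal variances the degrees of freedom are usually fractional, about 3.53 for [5, 6, 7, 8, 9] versus [2, 4, 6].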

What are the steps to conduct Discriminant analysis using SPSS?

SPSS is a comprehensive statistical package that helps analyze data and classify cases, and it provides quick measures of statistical significance within discriminant analysis. Discriminant analysis relies on relationships among variables; the correlation coefficient, for instance, indicates the extent to which two variables move together. Discriminant analysis is performed using a 7-step procedure:

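1. Collect training data

2. Specify prior probabilities

3. Use Bartlett's test to check whether the variance-covariance matrices are homogeneous across groups

4. Estimate the parameters of the conditional probability density functions

5. Compute the discriminant functions

6. Use cross-validation to estimate misclassification probabilities

7. Classify observations with unknown group membership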
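The parameter-estimation, discriminant-function and classification steps can be sketched for the simplest case: two groups, two predictors, equal prior probabilities and a common (pooled) covariance matrix. This is Fisher's linear discriminant written in plain Python; the training data and function names are hypothetical, and cross-validation is omitted for brevity.

```python
from statistics import mean


def fit_fisher_lda(group1, group2):
    """Fisher's linear discriminant for two groups measured on two predictors.

    Assumes equal priors and a common covariance matrix. Returns (w, threshold):
    a case x is assigned to group 1 when w . x exceeds the threshold.
    """
    def centroid(rows):
        return [mean(r[k] for r in rows) for k in range(2)]

    def scatter(rows, m):
        # Sum of outer products of deviations from the group centroid
        s = [[0.0, 0.0], [0.0, 0.0]]
        for r in rows:
            d = (r[0] - m[0], r[1] - m[1])
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
        return s

    m1, m2 = centroid(group1), centroid(group2)
    s1, s2 = scatter(group1, m1), scatter(group2, m2)
    dof = len(group1) + len(group2) - 2
    # Pooled within-groups covariance matrix and its 2x2 inverse
    sp = [[(s1[i][j] + s2[i][j]) / dof for j in range(2)] for i in range(2)]
    det = sp[0][0] * sp[1][1] - sp[0][1] * sp[1][0]
    inv = [[sp[1][1] / det, -sp[0][1] / det], [-sp[1][0] / det, sp[0][0] / det]]
    # Discriminant weights: inverse covariance times the difference in centroids
    diff = (m1[0] - m2[0], m1[1] - m2[1])
    w = [inv[i][0] * diff[0] + inv[i][1] * diff[1] for i in range(2)]
    # With equal priors, the cut-off is the score of the midpoint between centroids
    threshold = sum(w[k] * (m1[k] + m2[k]) / 2 for k in range(2))
    return w, threshold


def classify(x, w, threshold):
    """Assign a new case to group 1 or group 2 using its discriminant score."""
    score = w[0] * x[0] + w[1] * x[1]
    return 1 if score > threshold else 2
```

In the test data, the group centroids are (5, 5) and (1, 1), the weight vector is (6, 6) and the cut-off is 36, so a new case at (4, 3) scores 42 and is assigned to group 1.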

Discriminant function analysis is a statistical procedure for classifying cases into groups. It is a versatile method that can classify observations into two or more categories. All steps and variables are reviewed to determine which variables contribute most to discriminating between the groups. Next comes Wilks' lambda, which measures how well each function separates the cases into groups; smaller values indicate better separation. The eigenvalue of each function (root) is the ratio of between-group to within-group variation in its discriminant scores, and the percentage of variance attributed to each function shows its share of the total discriminating power. A larger eigenvalue indicates that the function differentiates the groups better. The structure matrix, which gives the correlation of each variable with each discriminant function, is central to interpreting the results because it shows how closely each variable is related to each function.

Discriminant analysis should not be confused with discrimination testing, which is used in sensory and product research. Sensory tests are commonly divided into three groups: discrimination, descriptive, and affective tests. Discrimination tests assess whether assessors can tell apart samples from different sources, so the sensitivity of the test is important. They support assessments of reliability and product decision-making.
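For a single discriminant function, the eigenvalue, Wilks' lambda and the canonical correlation can all be obtained from the between-group and within-group sums of squares of the discriminant scores. The sketch below assumes the scores have already been computed (the numbers are illustrative and the function name is hypothetical); with several functions, the overall Wilks' lambda is the product of 1 / (1 + eigenvalue) over the functions.

```python
import math


def function_summary(scores_by_group):
    """Eigenvalue, Wilks' lambda and canonical correlation for one discriminant function.

    scores_by_group holds the discriminant scores of the cases in each group.
    """
    values = [s for g in scores_by_group for s in g]
    grand = sum(values) / len(values)
    ss_total = sum((s - grand) ** 2 for s in values)
    ss_within = sum((s - sum(g) / len(g)) ** 2 for g in scores_by_group for s in g)
    ss_between = ss_total - ss_within
    eigenvalue = ss_between / ss_within     # between- to within-group ratio
    wilks_lambda = ss_within / ss_total     # equals 1 / (1 + eigenvalue)
    canonical_r = math.sqrt(ss_between / ss_total)
    return eigenvalue, wilks_lambda, canonical_r
```

For scores [4, 5, 6], [6, 7, 8] and [8, 9, 10], SS between = 24 and SS within = 6, so the eigenvalue is 4, Wilks' lambda is 0.2 = 1 / (1 + 4), and the canonical correlation is √0.8 ≈ 0.89.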

In SPSS, the crucial steps of a discriminant analysis involve specifying the dependent variable (DV) and the independent variables (IVs) in the Analyze > Classify > Discriminant dialog. The DV is the grouping variable, and its range must be defined by entering the lowest and highest group codes. The Classify options control how group membership is predicted, for example by setting prior probabilities and requesting a combined-groups plot of the discriminant scores, and the Save options can store predicted group membership, discriminant scores, and membership probabilities. By default, classification uses the pooled within-groups covariance matrix, and cases with missing values are excluded. Differences on the second discriminant function (DF2) can then be examined between pairs of groups when reviewing the analysis. For example, an educational researcher may wish to discriminate between several naturally occurring groups, such as school graduates who decide to attend a professional school or to pursue some other training.

To classify new cases, several variables must be considered together to discriminate between groups. Multivariate analysis and the prediction of group membership are also useful in areas such as predicting academic performance. Classic examples of discriminant function analysis come from human resources: a human resources department wants to know whether three job classifications, such as customer service personnel, appeal to different personality types. Employees are given a psychological test that measures, among other things, interest in outdoor activities and conservativeness. The number of discriminant functions is the lesser of g − 1 and p, where g is the number of groups and p is the number of discriminating variables. Two-group discriminant analysis is used when the dependent variable has two categories, and multiple discriminant analysis when it has three or more. Linear discriminant function analysis performs a multivariate test of differences between groups and determines the minimum number of dimensions needed to describe these differences. It is a parametric technique that uses quantitative predictor variables.

Discriminant analysis identifies the predictors that best discriminate between groups. The term "discriminant" is also used in algebra, with a different meaning: in the quadratic formula, the discriminant is the expression b² − 4ac under the square root symbol, and it shows whether a quadratic equation has two real solutions, one, or none. Discriminant analysis is closely related to regression: like linear regression, it models the relationship between several independent variables and a dependent variable, but the dependent variable is categorical. In one example, the discriminant functions were statistically significant and explained 31.4% of the variance. Linear (normal) discriminant analysis can be viewed as a generalization of the regression technique. Sequential one-way discriminant analysis assesses the discriminating power of the variables between the groups, and one-way discriminant analysis is associated with Fisher's discriminant coefficients. Fisher originally developed the approach to identify the species to which plants belong. In SPSS syntax, a sequential one-way discriminant analysis specifies inclusion levels for the variables; these range from 99 to 0, and variables at level 0 are never included in the analysis. Discriminant analysis predicts a single categorical variable from continuous predictors. Important data can be presented using discriminant analysis in SPSS, which creates a latent variable for each function.

Difference between Analysis of Covariance (ANCOVA) & ANOVA

Definition of ANOVA

ANOVA stands for analysis of variance, a statistical technique used to determine whether the means of two or more populations differ. The total variation in the samples is partitioned into parts attributable to specific sources, so that the causes of variation can be identified.

Classification of ANOVA

One-way ANOVA: a single factor is investigated, and the differences among its categories are examined; the factor may have any number of levels.

Two-way ANOVA: two factors are investigated simultaneously to measure their influence on the dependent variable.

Definition of ANCOVA

ANCOVA stands for analysis of covariance, an extension of ANOVA. The effects of one or more covariates are removed from the dependent variable before the group differences are tested. ANCOVA thus combines ANOVA and regression analysis, taking into account the variability due to other variables.

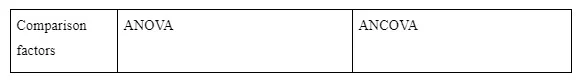

The key differences between ANOVA and ANCOVA lie in the statistical components each one takes into account.

Differences between the chosen parameters

Analysis of covariance compares a dependent variable across groups while controlling for other variables (covariates) present in the population. ANOVA, on the other hand, is used to compare and contrast the means of two or more populations. ANCOVA is used in research where the effects of some antecedent variables must be removed. For example, pre-test scores can be used as covariates in pre-test/post-test experimental designs. In the case of ANOVA, the procedure determines whether the differences between groups of data are statistically significant, using the variance observed in samples taken from each group.
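The way ANCOVA removes the effect of a pre-test covariate can be sketched through adjusted means: each group's post-test mean is shifted by the pooled within-group slope times the distance between that group's pre-test mean and the grand pre-test mean. This is a minimal illustration assuming a common slope in all groups (homogeneity of regression slopes); the data and the function name are hypothetical, and the F test itself is left to SPSS.

```python
from statistics import mean


def ancova_adjusted_means(groups):
    """groups: list of (pretest_scores, posttest_scores) pairs, one per group.

    Returns (pooled within-group slope, list of covariate-adjusted post-test means).
    """
    grand_x = mean([x for xs, _ in groups for x in xs])
    sxy = sxx = 0.0
    for xs, ys in groups:
        mx, my = mean(xs), mean(ys)
        # Accumulate within-group cross-products and sums of squares
        sxy += sum((x - mx) * (y - my) for x, y in zip(xs, ys))
        sxx += sum((x - mx) ** 2 for x in xs)
    b_within = sxy / sxx
    # Shift each group mean to where it would be at the grand covariate mean
    adjusted = [mean(ys) - b_within * (mean(xs) - grand_x) for xs, ys in groups]
    return b_within, adjusted
```

In the test data, the raw post-test means (22 and 30) differ by 8, but group B also started 4 points higher on the pre-test; with a pooled slope of 1, the adjusted means are 24 and 28, a difference of 4.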

One-way ANOVA is used to determine whether there are statistically significant differences between the means of three or more independent groups. It extends the independent-samples t-test, which compares the means of two groups. Two-way ANOVA is used when there is one measurement variable and two nominal variables. In simple words, when an experiment produces a quantitative outcome and there are two categorical explanatory variables, a two-way ANOVA is appropriate. For a one-way example, social media use can be taken as the independent variable, with participants assigned to low, medium, and high usage groups, to find out whether there is any difference in hours of sleep per night.

Other types of analysis of variance include MANOVA, which differs from ANOVA in that it assesses several dependent variables simultaneously, whereas ANOVA assesses only one dependent variable at a time. The p-value is the area under the F distribution that gives the probability of observing a result at least as large as the one obtained from the experiment, F0, if the null hypothesis is true. As an inferential method, ANOVA draws conclusions about a population from a given sample. The value calculated from the researcher's data is called the test statistic, or F value. If the calculated F value is larger than the critical F value, the null hypothesis can be rejected. Three-way ANOVA has also proved useful in finance and the social sciences. ANOVA and ANCOVA can be compared in terms of their uses, what they include, and how they handle covariates.

ANOVA identifies differences among the means of multiple groups and assumes homogeneity of variance. ANCOVA, by contrast, is used to remove the impact of one or more metric-scaled extraneous variables. ANOVA can be used with both linear and nonlinear models, whereas ANCOVA uses a single linear model. ANOVA attributes between-group variation exclusively to the treatment, while ANCOVA divides between-group variation between the treatment and the covariate. Similarly, ANOVA attributes within-group variance to individual differences, whereas ANCOVA uses regression analysis to divide within-group variance between individual differences and the covariate.

ANOVA in SPSS can process large amounts of data in statistical analysis. Like t-tests, it is used to find out whether differences between groups of data are statistically significant. A covariate is an independent variable that is included to control for its influence while examining the "effects of the categorical independent on an interval dependent variable"; the analysis then adjusts for the covariate. T-tests determine whether two populations are statistically different from each other. Throughout this process, ANOVA and ANCOVA come in handy within the SPSS software.

How to Interpret Multiple Regression Results in SPSS?

Multiple regression analysis is run from the Analyze menu in SPSS. The analysis determines whether the association between the predictors and the response is statistically significant and whether the model fits the data adequately; the model should also meet the assumptions of multiple regression. For example, a multiple regression could be used to assess how well age and stress level predict quality of life. When reporting regression results, the R square should be reported first, followed by the beta values for the predictors and the significance of their contribution to the model, based on their p-values. To run the analysis, click Analyze > Regression > Linear and specify the variables in the Linear Regression dialog box.
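Behind the Linear Regression dialog, the coefficients are ordinary least-squares estimates. The sketch below solves the normal equations for two predictors in plain Python; the variables stand in for age and stress predicting quality of life, but the values are synthetic (constructed so that y = 10 + 2·x1 − 3·x2 exactly) and the function name is hypothetical.

```python
def fit_multiple_regression(x1, x2, y):
    """OLS fit of y = b0 + b1*x1 + b2*x2 by solving (X'X) b = X'y.

    Returns ([b0, b1, b2], r_squared).
    """
    rows = [[1.0, a, b] for a, b in zip(x1, x2)]
    # Build X'X (3x3) and X'y (length 3)
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
    # Gauss-Jordan elimination with partial pivoting on the augmented matrix
    aug = [xtx[i] + [xty[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda k: abs(aug[k][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(3):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [u - factor * v for u, v in zip(aug[r], aug[col])]
    coef = [aug[i][3] / aug[i][i] for i in range(3)]
    # R squared: share of the variation in y explained by the fitted values
    fitted = [coef[0] + coef[1] * a + coef[2] * b for a, b in zip(x1, x2)]
    y_mean = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)
    return coef, 1 - ss_res / ss_tot
```

Because the synthetic data contain no noise, the fit recovers the coefficients 10, 2 and −3 with R² = 1; real data would give R² below 1.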

The sign of a regression coefficient indicates the direction of the relationship: a positive coefficient shows a positive relationship between the predictor and the outcome, and a negative coefficient a negative one. When results are reported, the standard error of each coefficient is often given in parentheses. Multiple regression examines the relationship between several independent variables and one dependent variable, whereas multivariate regression involves more than one dependent variable; this is the difference between the two. SPSS experts can help with linear and regression analysis. A linear regression model can be extended to include more than one independent variable, and it is then called a multiple regression model; it can explain more of the variation in the outcome than a simple regression. The adjusted R-squared value shows how well the model fits after accounting for the number of independent variables added.

Adjusted R-squared allows a regression model to be compared with a model that includes additional input variables. If adding a variable lowers the adjusted R-squared, the variable does not add value to the model. The F-test indicates whether the regression model as a whole is statistically significant. A p-value below 0.05 is conventionally considered statistically significant: it provides evidence against the null hypothesis and indicates that the regression model fits the data better than a model with no predictors. By convention, this accepts a 5% chance of being wrong, that is, of making a Type I error. Many authors report highly significant values as p < 0.001, where the risk of a Type I error is very small. The null hypothesis is the position the experiment aims to reject. The SPSS output shows how each predictor contributes to the model. To run a multiple linear regression in SPSS, click Analyze > Regression > Linear, move the outcome score into the Dependent box, and move the predictors, such as demographic variables, into the Independent(s) box. The Sig. column gives the p-value for each predictor; collinearity statistics such as tolerance can also be requested, and polynomial terms can be tested in the same way.
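The adjusted R-squared formula penalizes each added predictor. The sketch below uses the standard formula, adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1), with n cases and p predictors; the R² values are hypothetical.

```python
def adjusted_r_squared(r_squared, n, p):
    """Adjusted R^2 for a model with p predictors fitted to n cases."""
    # The (n - 1) / (n - p - 1) factor grows with p, penalizing extra predictors
    return 1 - (1 - r_squared) * (n - 1) / (n - p - 1)
```

With 30 cases, R² = 0.50 and two predictors give an adjusted R² of about 0.463; adding a third predictor that raises R² only to 0.505 lowers the adjusted value to about 0.448.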

Key output includes the p-values, R², and residual plots. Multiple logistic regression predicts the probability that the Y variable takes a particular value as a function of the X variables. Once the model is fitted, the independent variables can be measured on new individuals to estimate the probability that they have a particular value of the dependent variable. To run the test in SPSS, click Analyze > Regression > Binary Logistic. Multivariable analysis and correlation analysis are important stages of a logistic regression analysis. SPSS helps organize variables and components that may differ in their explanatory power. Although SPSS is commercial software, its ease of use has made it popular, and it also supports linear regression analysis.
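Once a logistic model has been fitted, the predicted probability for a new individual follows from the coefficients (the "B" values in the SPSS output). The sketch below assumes a binary outcome; the intercept, coefficients and predictor values are hypothetical.

```python
import math


def logistic_probability(intercept, coefficients, x):
    """P(Y = 1 | x) for a fitted binary logistic regression model."""
    # Linear predictor (log-odds), then the logistic transformation
    linear_predictor = intercept + sum(b * xi for b, xi in zip(coefficients, x))
    return 1 / (1 + math.exp(-linear_predictor))
```

With intercept −1, coefficients 0.5 and 0.25, and predictor values 2 and 4, the linear predictor is 1 and the predicted probability is about 0.73.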

Linear regression analysis is often the next step after a correlation analysis. It is used to predict the value of one variable based on the value of another. Multiple regression is an extension of simple linear regression; both fit the line that best fits the data points. Simple linear regression involves visualizing the data and fitting a simple regression model; the third step is to interpret the results and build charts. In the SPSS Coefficients table, the unstandardized B in the (Constant) row is the intercept, and the unstandardized B for the predictor, for example hours, is the slope. The p-values appear in the "Sig." column, including the one for hours. Any of these terms is easy to understand with an SPSS expert's help at a low cost.
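The intercept and slope that SPSS reports as unstandardized B values are least-squares estimates. The sketch below computes them in plain Python for one predictor; the study-hours data and the function name are hypothetical.

```python
def simple_linear_regression(x, y):
    """Least-squares intercept and slope for y = b0 + b1 * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    # Slope: cross-products of deviations divided by squared x deviations
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sxy / sxx
    # The fitted line passes through the point of means
    return my - slope * mx, slope
```

For hours 1 to 5 and scores 52, 54, 59, 60 and 65, the slope is 3.2 points per hour and the intercept is 48.4.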
