An Investigation into the Use of Facial Recognition Technology to Enhance Gamers’ Engagement

Introduction

Background Information

Today, computer gaming is considered one of the most popular forms of entertainment in the world. Games involve players in challenges, and through play they learn to manage the fears and difficulties that the games present. Both negative and positive emotions therefore contribute to the gaming experience, and a player's emotions play a large part in what they take away from a game. Interacting with game elements such as the mechanics and the game world helps players build their experience of the game. There are many ways to increase players' experience and satisfaction, for example through the objects in the game or through the input devices. Game balancing is another technique that can make a player more interested in a game. It lets players match the game to their own experience, typically by offering a choice of easy, hard, or expert levels. A game that is too difficult frustrates the player, whereas a game that is too easy bores them. Players commonly communicate feelings such as frustration and boredom through their facial expressions, so a balancing mechanism could dynamically modify the game difficulty based on the player's reaction to the current difficulty. For anyone seeking a deep understanding of gaming experiences, facial expressions can therefore provide authentic insights and guidance.

Gaming technology has improved over the years largely because of Artificial Intelligence (AI), which has helped the industry grow. AI has eased the work of game developers, allowing them to create games that tailor the experience to the end user's age, gender, experience, and capabilities (Ninaus et al., 2019). The gaming industry is one of the largest revenue-generating industries in countries such as the United States and the UK (Fitzgerald & Ratcliffe, 2020), a position it owes in part to AI continually improving the game experience to meet gamers' needs. AI and modern computers have shown that game production can improve the user experience to meet the demands of a growing player population (Ninaus et al., 2019). Nevertheless, little work has examined how to improve the player's experience while a game is actually being played. This raises the question: how can facial recognition technology be used to enhance gamers' engagement? Numerous works in the literature have advocated using the player's facial expression recognition (FER) to dynamically balance game difficulty, but only a few empirical tests of the idea have been carried out so far. One study proposed dynamically balancing a hack-and-slash game with an FER algorithm (Bond et al., 2020). Although dynamic balancing improved the player's experience in that game, it is not yet known whether it would have the same effect in other genres.

Objectives

The objective of this research paper is to investigate the use of facial recognition technology to enhance gamers' engagement. Because the player is the most important part of a game, improving their experience should lead more people to buy and play games, since their needs will have been catered for.

Research Questions

This dissertation aims to answer the following questions.

What is the importance of facial expressions in gaming?

How can facial expressions improve the gamer’s experience?

Will the use of facial expressions increase demand in the gaming industry?

Chapter Two: Literature review

This chapter reviews recent literature on the use of facial recognition technology to enhance gamers' engagement. It covers the concepts and technological advances supporting the development and use of this technology in the gaming world.

Facial Gaming Technology

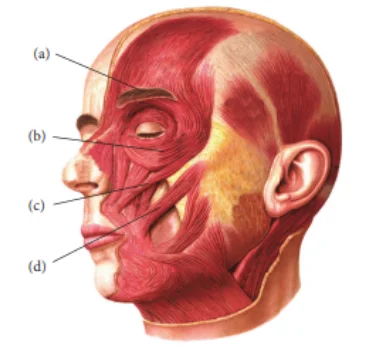

Analysis of facial behaviour in games relies on data obtained either from physical sensors attached to the player or from visual methods that observe the player's face. Sensor-based methods use electromyography (EMG) to measure the electrical activity of the player's facial muscles associated with smiling, eyelid control, and frowning. Yu et al. (2021) present evidence of more frequent corrugator activity when positive game events occur. Ninaus et al. (2019) report increased activity of the zygomaticus muscle associated with self-reported positive emotions, and Sharma et al. (2019) associate positive and rewarding game events with increased orbicularis oculi EMG activity. Sensor-based approaches can be more resilient to varied lighting conditions and facial occlusions, but they are obtrusive because physical sensors must be attached to the subject. Automated visual methods, by contrast, analyse facial behaviour without any physical contact. The process usually involves face detection, localisation of facial landmarks, and classification of facial features into facial expressions. One classification approach is based on distances between facial landmarks.

(a) Corrugator supercilii. (b) Orbicularis oculi. (c) Zygomaticus minor. (d) Zygomaticus major

Distances between facial points can be used to train a Support Vector Machine (SVM) model. Manca et al. (2021), for example, use 12 distances calculated from 14 landmarks to classify fear, love, joy, and surprise. In a case study by Khazaal et al. (2019), features such as eyebrow position are computed from distances along lines in key regions of the face defined by the MPEG-4 facial animation standard.
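As a rough illustration of distance-based features, the sketch below computes the Euclidean distance between every pair of landmarks and divides by the largest one, so the features do not depend on face size. It assumes the (x, y) landmark coordinates come from some upstream face-landmark detector, and, to stay dependency-free, it swaps the SVM mentioned above for a simple nearest-centroid classifier. The function names and the emotion labels are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def distance_features(landmarks: Sequence[Point]) -> List[float]:
    """Pairwise Euclidean distances, scaled by the largest one.

    Assumes at least two distinct landmarks from an upstream detector.
    """
    dists = [math.dist(a, b) for a, b in combinations(landmarks, 2)]
    longest = max(dists)
    # Dividing by the longest distance makes the features invariant to face size.
    return [d / longest for d in dists]


def nearest_centroid(train: Dict[str, List[List[float]]], x: List[float]) -> str:
    """Stand-in for an SVM: return the label whose mean feature vector is closest to x."""
    best_label, best_dist = "", math.inf
    for label, vectors in train.items():
        centroid = [sum(col) / len(col) for col in zip(*vectors)]
        d = math.dist(centroid, x)
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label
```

With 14 landmarks this yields 14 × 13 / 2 = 91 candidate distances, from which a study such as Manca et al. (2021) would keep a subset (12 in their case) before training a classifier.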

Huynh et al. (2018) use up to 30 Euclidean distances between multiple facial landmarks gathered from 3D face models to recognise the six universal facial expressions, applying a correlation-based feature selection technique to retain the distances that best distinguish the emotions from one another. Akakın and Sankur use trajectories of facial landmarks to recognise both head gestures and facial expressions. Some visual methods rely on manual or automated analysis with the Facial Action Coding System (FACS), a standard for categorising and measuring emotional expression. Huynh et al. (2018) analysed video recordings of subjects playing a game with a stressful part and a neutral part; manual FACS coding, which is considered the gold standard, reported a larger number of action units (AUs). The authors report that lip corner pull and inner/outer brow raise occurred more frequently during the gaming sessions. Dewan et al. (2018) use FACS-based facial analysis to appraise a subject's emotional state, and Akbar et al. (2019) use automated analysis to examine the relationship between facial expression and learning in tutoring sessions. Gamage and Ennis (2018), rather than relying on FACS, build their model from an empirical facial analysis combined with the authors' own interpretation of the scene.
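The exact correlation-based criterion used by Huynh et al. (2018) is not stated here. For reference, the merit heuristic of standard correlation-based feature selection (CFS) scores a candidate subset S of k features as shown below, where the first bar term is the mean feature-to-class correlation and the second is the mean feature-to-feature intercorrelation. A subset of distances scores highly when its members correlate with the emotion labels but not with one another.

```latex
\mathrm{Merit}_S = \frac{k\,\overline{r_{cf}}}{\sqrt{k + k(k-1)\,\overline{r_{ff}}}}
```

With a single feature (k = 1) the merit reduces to that feature's correlation with the class, and adding redundant features (high intercorrelation) enlarges the denominator and lowers the score.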

Most of the time, subjects show only a neutral face, and the literature disagrees about whether facial expressions can serve as the sole source of information. One report states that when players keep a neutral face during a game, their frustration is captured by head movements and hand gestures rather than by facial expressions, and Shaker et al. (2018) reached a similar conclusion. Head expressivity is an indicator of a player's game experience: frequent, constant head motion is indicative of failing in the game. Studies show that facial analysis based on physical sensors is more robust to lighting and head movement than visual analysis. However, wearing sensors raises users' awareness of being monitored, which can affect their experience of the game, so video analysis is the less intrusive approach. Although manual FACS coding is a useful quantitative approach to measuring facial expression, it is very time-consuming and requires certified coders to inspect the video recordings.

Facial expression game balancing

For decades, games have evolved to enhance their look and feel and to increase the intelligence of non-player characters, and researchers have studied these topics extensively. Augmented, Virtual, and Mixed Reality, together with sensors such as the camera, GPS, accelerometer, microphone, and gyroscope, have also been proposed as ways to seamlessly combine the game world with the player's reality. All of these strategies improve the player's immersion and their interactions with other players and objects in the game. Engagement and immersion are therefore crucial factors in building the game experience presented to players (Fitzgerald & Ratcliffe, 2020).

It is fairly straightforward to improve the player's experience by enhancing the game's aesthetics, for example through facial expressions, but this strategy normally demands expensive graphics resources. Most games use images and audio, such as voice, sound effects, and background music, that contribute to how the game looks and feels. Players' experiences can also be improved with virtual/mixed/augmented reality (Fitzgerald & Ratcliffe, 2020) and sensors (Buil et al., 2019), which let gamers engage with both the real world and the game's virtual environment. However, technological limitations, inflexibility, and high implementation costs create problems for using virtual/mixed/augmented reality and sensors in games.

Another common way to improve the player's experience is to increase the intelligence of game agents such as Non-Player Characters (NPCs) and enemies, and several strategies have been proposed for this. The most conventional and powerful way is to script AI behaviour for game characters or NPCs. Pathfinding, for example, is an AI problem that has been well solved for many years and is still widely used today. Dynamic balancing, by contrast, is still in its infancy, and no standard guidelines or methodologies exist for creating a dynamic balancing system in a game. Such a system modifies the game's difficulty by adjusting various predefined factors, which can be changed in real time based on the player's performance. This strategy aims to keep the difficulty appropriate for every player, whether an expert or a casual gamer, and the player's gaming experience improves as a result (Grau-Moya et al., 2019).
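A minimal sketch of performance-based dynamic balancing, assuming the game records whether each recent encounter was won and offers three discrete difficulty levels. The thresholds (raise at a 70% win rate, lower at 30%) and the function name are hypothetical choices, not values from the cited work.

```python
from typing import List

LEVELS = ["easy", "normal", "hard"]


def next_level(current: str, recent_outcomes: List[bool],
               raise_at: float = 0.7, lower_at: float = 0.3) -> str:
    """Move one level up or down based on the player's recent win rate."""
    if not recent_outcomes:
        return current  # no evidence yet: keep the current level
    win_rate = sum(recent_outcomes) / len(recent_outcomes)
    i = LEVELS.index(current)
    if win_rate >= raise_at:
        i = min(i + 1, len(LEVELS) - 1)  # player is cruising: make it harder
    elif win_rate <= lower_at:
        i = max(i - 1, 0)                # player is struggling: make it easier
    return LEVELS[i]
```

Stepping one level at a time, with a dead band between the two thresholds, avoids the difficulty oscillating on every single win or loss.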

Since their beginnings, video games have used adaptive techniques based on player performance data (Chen et al., 2018). A player-centric, adaptable game is superior to static, non-adaptive gameplay if it can successfully match the player's basic cognitive traits. In such games, the difficulty is progressively increased based on the performance and skills the player displays during gameplay. However, playing video games is fundamentally an emotional experience, so adapting the game based on player performance alone is not enough. In research on player experience, Schrader et al. (2017) and Yang et al. (2017) propose that players should be given the power to make their own choices and decisions, which affect the feelings they experience immediately or later in the game. In this view, facial expressions offer a way to keep the user's experience and emotions balanced during the game. Affective games, unlike purely performance-based ones, rely heavily on emotion-based adaptation methods to alter individual game components and parameters.

Emotional game design

Emotional games were first introduced by Eva Hudlicka (De Smale et al., 2019), who borrowed the idea from affective computing. According to Yang et al. (2019), affective computing recognises and categorises emotional patterns and human affects, which involves processing, interpreting, recognising, and simulating them (Kokkinidis et al., 2021). Pozo (2018) researched how emotion-based facial expression analysis can help improve the expression of game players' emotions. Emotional game design is therefore important for processing, interpreting, recognising, and simulating the experience of gamers in a given game (De Smale et al., 2019).

Players' experiences can be improved by implementing affective aspects in a game (Lämsä et al., 2018), such as introducing facial expression into game development. One way to design an emotional game that can be controlled by facial expression is to give agents such as NPCs and enemies the ability to recognise, process, interpret, and simulate emotions and feelings (Walk et al., 2017). Players' affective states can be captured using sensors such as a camera or a microphone and then analysed by the agents. Computer models then simulate the agents' emotions based on their internal feelings and the emotions they have interpreted. The agents should also express their feelings through their behaviour, for instance through their words, tone of voice, and facial expressions (Walk et al., 2017). Capturing the player's affective state can also be used to dynamically modify the game's difficulty; for example, difficulty variables can be lowered when a player shows a stressed or anxious expression. Facial Expression Recognition (FER) makes this possible by analysing players' facial expressions (Muñoz & Dautenhahn, 2021): the algorithm automatically interprets the facial cues that players give off during the game. To date, only a few practical studies have been conducted to validate the concept of FER for dynamic balancing in games.

For user experience analysis based on facial expressions, only a few studies have been conducted, and those in non-game applications (Walk et al., 2017). Kokkinidis et al. (2021) evaluated the positive and negative expressions of customers of an online shopping website. Gennari et al. (2017) used an automated facial expression analysis system to determine the emotions of users performing basic computer operations and found a high degree of correlation between the system's findings and expert analysis. Facial expression recognition has also shown encouraging results in generic emotion detection (Muñoz & Dautenhahn, 2021).

Affective feedback research

Dawson et al. (2019) note that in traditional biofeedback, users learn to control their physiological condition using bio-sensor data presented on a device or another designated medium. According to Winstone and Carless (2019), computers should be able to perceive, react to, and alter our physiological state in a meaningful way. In the game Relax-to-Win, for example, the player's galvanic skin response (GSR) is used to measure their current level of relaxation, which in turn controls the speed of a dragon. Because the computer (rather than its user) learns how the environment affects a person's psychophysiological condition, it can adapt its behaviour accordingly (Nassaji & Kartchava, 2017). User involvement grows when users' feelings are reinforced. To react effectively to users' emotional expressions, however, computers must classify or estimate the physiological responses they receive from users (McCutcheon & Duchemin, 2020). Winstone and Carless (2019) state that when a computer or a console such as a PlayStation can balance or change levels according to the player's experience, players become attached to the game.
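The Relax-to-Win mechanism can be sketched as a clamped linear mapping from skin conductance to dragon speed. This assumes, as is usual for GSR, that lower skin conductance indicates lower arousal (more relaxation), and that the game calibrates a calm and a stressed reading for each player; the function and parameter names are hypothetical and are not taken from the original game.

```python
def dragon_speed(skin_conductance: float, calm: float, stressed: float,
                 max_speed: float = 10.0) -> float:
    """Map a GSR reading to a speed: the calmer the player, the faster the dragon.

    `calm` and `stressed` are assumed per-player calibration readings (calm < stressed).
    """
    if stressed <= calm:
        raise ValueError("stressed reading must be above calm reading")
    relaxation = (stressed - skin_conductance) / (stressed - calm)
    relaxation = min(1.0, max(0.0, relaxation))  # clamp to [0, 1]
    return max_speed * relaxation
```

Calibrating per player matters because baseline skin conductance differs widely between people, so a fixed absolute threshold would favour some players over others.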

Emotional games support the affective feedback loop, as opposed to the traditional biofeedback loop, which does not propagate uncontrolled emotive information. Once players become skilled enough to control their typical physiological responses, however, an affective game turns into one with "straightforward biofeedback" (Muñoz & Dautenhahn, 2021). Winstone and Carless (2019) define games with "direct physiological control" as games in which the player controls actions in a simulated world by flexing muscles, directing their gaze, or blowing hot air on a temperature sensor. In indirect physiological games, by contrast, involuntary bodily signals such as heart rate and galvanic skin response are used to alter the game. McCutcheon and Duchemin (2020) report the use of both implicit and explicit biofeedback in a First-Person Shooter (FPS) game, which increased the player's immersion. Implicit control was based on the player's physiological condition and did not require the player to be aware of the changes. Drawing on classical control theory, Muñoz and Dautenhahn (2021) propose a more precise processing model for player-centric adaptation in emotive video games.
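To make the control-theory framing concrete, here is a hypothetical proportional controller; it is not the model proposed by Muñoz and Dautenhahn (2021). It closes a negative feedback loop: when the measured arousal (assumed to be normalised to [0, 1] by some upstream sensor or FER estimate) exceeds a target, difficulty is reduced, and vice versa. The gain, bounds, and the toy player model in `simulate` are illustrative assumptions.

```python
def control_step(difficulty: float, measured_arousal: float, target_arousal: float,
                 gain: float = 0.5, low: float = 0.0, high: float = 1.0) -> float:
    """One proportional-control update of a normalised difficulty value."""
    error = target_arousal - measured_arousal  # positive when the player is under-aroused
    return min(high, max(low, difficulty + gain * error))


def simulate(steps: int = 20, start: float = 0.9, target: float = 0.6) -> float:
    """Run the loop against a toy player whose arousal rises linearly with difficulty."""
    difficulty = start
    for _ in range(steps):
        arousal = 0.2 + 0.8 * difficulty  # hypothetical player model
        difficulty = control_step(difficulty, arousal, target)
    return difficulty
```

With this toy model the loop settles near a difficulty of 0.5, where 0.2 + 0.8 × 0.5 equals the 0.6 target; a real player's response would be noisy and delayed, which is why a smaller gain is often safer.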

Game Adaptations

According to Affective Loop theory, game adaptations provide subtle reactions, which in turn elicit emotional responses in the player. Since emotions and physiological reactions are involved in affective game design, it is vital to identify possible targets for adaptation. Because real-time physiological reactions vary between players with different gaming experience and affective personality traits, Henderson et al. (2019) proposed a facial recognition approach to help track and improve a player's emotions during play. The approach balances the player's emotional reactions and, in turn, drives game adaptations that improve the user experience. In addition, gamers can be helped through difficult scenarios during gameplay by adaptive companions that change their behaviour (from cautious to supportive to aggressive) depending on the player's experience (McCutcheon & Duchemin, 2020). Rather than simply increasing the difficulty level, the Challenge Me algorithm uses Dynamic Difficulty Adjustment (DDA) to balance the game challenge against the player's abilities (Walk et al., 2017). Although Hey-Cunningham et al. (2021) only sketch out the last heuristic, it is very promising for inducing desired emotions by altering a video game's design aesthetics and multimedia content based on the player's actual feelings. In biofeedback-controlled games and environments, player emotions are recognised from physiological signals (Henderson et al., 2019).

Using a positive feedback loop, traditional DDA techniques use player performance as the basis for dynamically regulating task difficulty (Hey-Cunningham et al., 2021). Because it relies only on the player's performance, DDA can be implemented without any additional devices. Interactive emotional systems, which add to the complexity of the game, rely heavily on affective loop adaptation (Walk et al., 2017). Similarly to Carr et al. (2018), Henderson et al. (2019) integrated player fear input for DDA with traditional performance feedback to dynamically modify game difficulty in a negative feedback loop. They showed that anxiety-based feedback derived from facial expressions was more successful than performance-based feedback at producing immersive and challenging gameplay.

DDA can also modify the game's Artificial Intelligence (AI) by selecting the AI behaviours most relevant to the player's present abilities and emotions. There are several ways to modify NPC behaviour, such as dynamic scripting (generating alternative scripts for intelligent agents based on the player's behaviour), genetic algorithms for building NPCs, or adaptive agents (Henderson et al., 2019). DDA can also adapt inventory levels in certain game contexts by modifying level content (game items) according to the player's progress in learning the game's skills. Hamlet, a DDA system built on Valve's Half-Life game engine, alters the player's inventory in this way (Buil, Catalán, & Martínez, 2019). Reactive actions are used to alter "on-stage" game elements, while proactive actions adjust "off-stage" items.

There are also applications in which the user's emotional state is reflected in audio-visual effects, such as music players with affective features and online chat applications (Fitzgerald & Ratcliffe, 2020). In games, researchers have mapped players' physiological responses to audio-visual game features, using facial expressions to enhance the gameplay and presentation of a horror game. Chen et al. (2018) used stochastic algorithms that took into account the player's heart rate, skin conductance, and other psychophysiological variables to alter the ambient lighting of rooms within a video game. Pozo (2018) explored various psychophysiological approaches and sound design practices for creating stronger emotional experiences in adaptive, audio-centric gameplay, with a special focus on correlations between fear and game sound. In a car racing game, road visibility was adjusted dynamically together with car steering and speed (Fitzgerald & Ratcliffe, 2020).

An alternative approach is to focus on probable emotional triggers in games rather than classifying the aims of dynamic adjustment in affective gaming vertically into the three tiers of design described above. Fitzgerald and Ratcliffe (2020), for example, identified two key sources of probable emotional reactions in affective games: content adaptation and agent/NPC adaptation. From a high-level perspective, they consider not only the game environment, plot, camera profiles, and audio-visual settings and effects, but also the game mechanics, dynamics, and any other content that can be adjusted to a particular player's emotional state and can thereby affect the player's gaming experience (Chen et al., 2018). The automatic, dynamic content-generation methods outlined above offer new potential for affect-driven game content creation that synthesises personalised experiences in entertainment and serious gaming contexts (Ninaus et al., 2019). Such adaptation could aid the development of a facial expression mechanism that helps users while they play. Open questions remain, however, about which targets of game adaptation elicit emotional responses in a specific player. The affective loop also raises the question of emotion detection and interpretation: to detect changes in a player's emotional state, it is necessary to construct and exploit an adequate representation of that player (Chen et al., 2018).

Our approach is to analyse the facial features of subjects as they respond naturally to games. We argue that facial recognition technology offers the most promising way to improve users' gameplay experience. The configuration is designed to evoke two distinct emotional states in subjects, boredom and stress, through interaction with games rather than through videos or images.

Chapter Three: Methodology

Introduction

The research methodology in this chapter has two parts. First, we conducted a random survey using questionnaires; second, we used an experimental design to help this dissertation reach its goal. The experiment was conducted both in person and online, and respondents who answered our survey were shortlisted to take part in it. The main reason for conducting the survey was to find participants willing to take part in the experiment. The dissertation used two different modified games: one was played by the survey respondents before they answered the questionnaire, and the other was used for the experiment.

Methods and Model

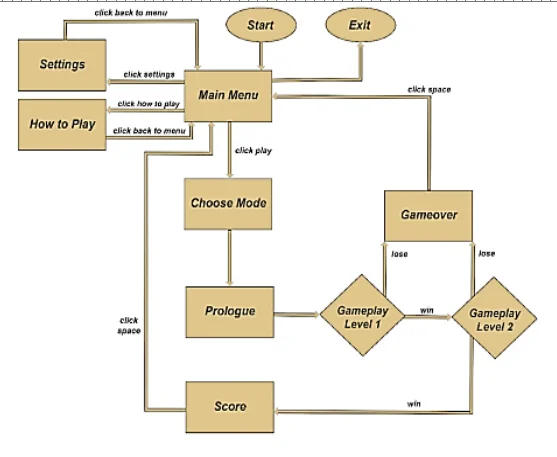

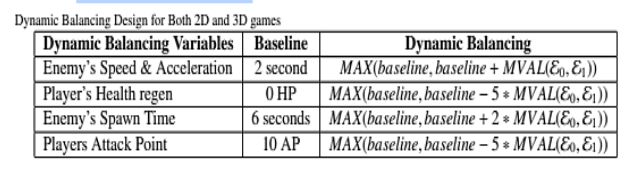

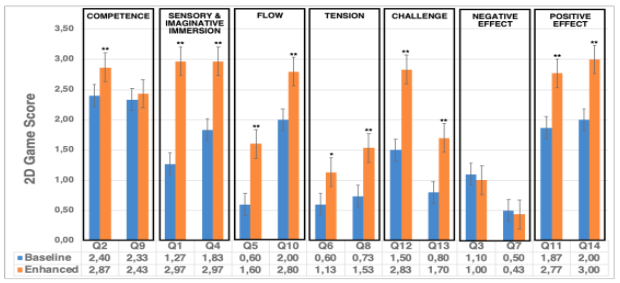

Figure 1 illustrates the general flow of the 2D and 3D games. Subsection 3.1 describes the game and the dynamic balancing system design for the 2D game, while Subsection 3.2 describes the 3D system. The player's facial expressions are grouped into two categories, and the set variables cause the system to either raise or lower the difficulty. The value e0 is defined by the maximum of the anger and patience values captured by the system, while e1 is the maximum of the Smile and Relaxed expression values calculated from the player's facial signals.
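The threshold logic described above can be sketched as follows. The section does not specify the scale of the expression values, the threshold, or which group raises and which lowers the difficulty, so this sketch assumes scores on a 0 to 100 scale, a hypothetical threshold of 50, and that the anger group (e0) lowers the difficulty while the Smile/Relaxed group (e1) raises it. Group membership is passed in by the caller, and the function names are illustrative.

```python
from typing import Dict, Iterable, Tuple


def group_maxima(scores: Dict[str, float], lower_group: Iterable[str],
                 raise_group: Iterable[str]) -> Tuple[float, float]:
    """Return (e0, e1): the largest score in each group (missing labels count as 0)."""
    e0 = max((scores.get(k, 0.0) for k in lower_group), default=0.0)
    e1 = max((scores.get(k, 0.0) for k in raise_group), default=0.0)
    return e0, e1


def balance_direction(e0: float, e1: float, threshold: float = 50.0) -> int:
    """-1 to lower the difficulty, +1 to raise it, 0 to leave it unchanged."""
    if e0 >= threshold and e0 >= e1:
        return -1  # strong negative expression dominates: ease off
    if e1 >= threshold and e1 > e0:
        return 1   # player looks comfortable: push harder
    return 0
```

For example, per-frame scores of anger 80, smile 10, and relaxed 5 give (e0, e1) = (80, 10), so the sketch would lower the difficulty.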

The 2D game was first played by the people who had deployed the games, while the survey respondents were asked to play the 3D game. Two versions of the game were played: one with the dynamic balancing system and one without it. Respondents were not told about the dynamic balancing system or about the order in which the two versions would be presented. Some respondents played the version with dynamic balancing first and then the version without it; the others played the version without balancing first. Respondents completed the Game Experience Questionnaire after every game they finished.

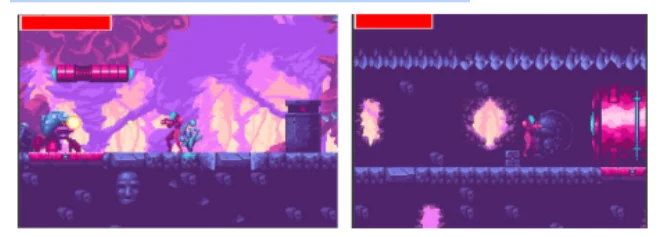

Alien Fighter: The 2D Game

Alien Fighter is set in outer space and follows an alien theme. The player controls a gun-wielding character fighting a group of aliens in an unknown place, and the goal is to pass all the levels by defeating every enemy and the boss. There are two videos of Alien Fighter. The game's dark green colour theme is reminiscent of outer space, though it is arguably not a good choice for a video game. The game uses a third-person view, and the player must follow a given path to defeat the enemies, fighting the level boss at the end of the path. The game has only two levels, which gives the player a relaxed experience while leaving enough time for the experiment. The player uses a keyboard and a mouse, and the camera provides the input for the dynamic balancing system.

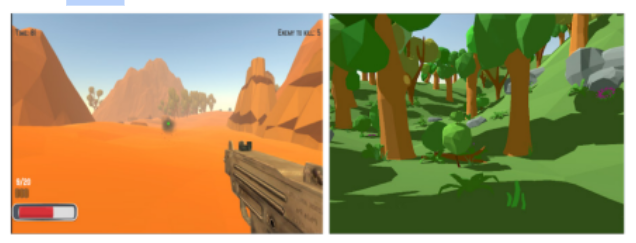

Rushing Escape: The 3D Game

Rushing Escape is a 3D game with an alien theme. Its colour theme is bright enough to make the player feel adventurous. Unlike the 2D game, it uses a first-person camera, and the player has a lot of freedom to explore the world. The goal is to find a purple diamond located far away from the player. Until the player finds the diamond, enemies move towards them, surrounding and attacking them. Once the player has killed 30 or more enemies, the boss comes to fight them. As in the 2D game, there are only two levels, so that the experiment does not take too long but the player still has enough time to enjoy the experience. The inputs are identical to those used in the 2D game.

Experimental setup

During the experiment, each subject sat alone in a room and was recorded by a camera and monitored by a heart rate sensor connected to a computer. The camera was mounted on a tripod 0.6 m from the subject and tilted slightly to the left. The light source was a spotlight placed 1.7 m from the subject and 45 cm above the camera. The FER system was built with Affectiva, which makes real-time recognition of the player's facial expressions possible. The detected emotions and facial expressions then influence the game mechanics. Both games have a goal, a number of enemies, and a boss. In both games, dynamic balancing adjusts enemy speed and acceleration, the player's health regeneration, the enemy spawn time, and the player's damage per hit. In the 3D game, the player can explore the outdoor world but must follow the path laid out in the game.
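The four balancing factors listed above could be held in one structure and scaled together. This is a hypothetical sketch: the field names, units, the 10% step, and the choice of which factors grow or shrink when the game gets harder are assumptions, not details of the games described here. The direction argument is +1 for harder, -1 for easier, and 0 for no change.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class BalanceParams:
    enemy_speed: float
    enemy_acceleration: float
    player_health_regen: float    # assumed: health points restored per second
    enemy_spawn_interval: float   # assumed: seconds between enemy spawns
    player_damage_per_hit: float


def adjust(p: BalanceParams, direction: int, step: float = 0.1) -> BalanceParams:
    """Return a new parameter set; direction +1 = harder, -1 = easier, 0 = unchanged."""
    harder = 1.0 + step * direction  # grows when the game gets harder
    easier = 1.0 - step * direction  # shrinks when the game gets harder
    return replace(
        p,
        enemy_speed=p.enemy_speed * harder,
        enemy_acceleration=p.enemy_acceleration * harder,
        player_health_regen=p.player_health_regen * easier,
        enemy_spawn_interval=p.enemy_spawn_interval * easier,  # shorter gap = more enemies
        player_damage_per_hit=p.player_damage_per_hit * easier,
    )
```

Keeping the parameters immutable and returning a fresh copy makes it easy to log every adjustment alongside the facial expression that triggered it.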

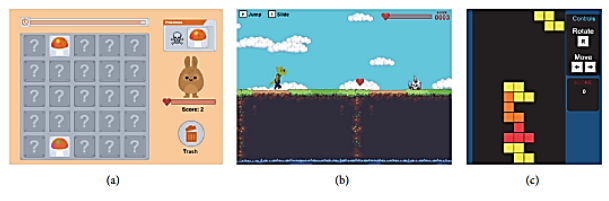

Figure 6 depicts the games used in the investigation, labelled (a) to (d): Mushroom, in which the player must distinguish between good and bad mushrooms by analysing colour patterns; Platformer, in which the player must leap over or slide under obstacles while collecting hearts; and Tetris, a clone of the original game but with no indication of the next piece to enter the screen.

Pilot study: online personalisation

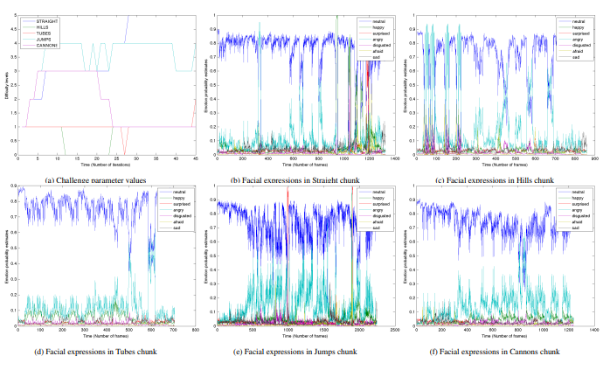

The pilot study analysed online personalisation for the survey respondents who agreed to take part in the online gaming study. Participants were placed in a room with well-balanced lighting and asked to play the Mario game as instructed. They were asked to relax and play just as they would at home, but to avoid unnecessarily blocking their faces, for example by raising their hands or drinking coffee, during the experiment (Chen, Konrad, & Ishwar, 2018). Participants were instructed to start at level one, the study's initial value; if they played accurately, the game automatically moved to the next level once they had completed the first (Yang et al., 2020). The level could also change automatically in response to changes in the user's facial expression. The figure above illustrates the results obtained from the participants in panels 3b to 3f.

During the experiment, we observed general trends in how the algorithm changed the per-chunk challenge levels. As shown in 3f, the chunk levels changed whenever a facial expression was detected on the user's face, moving from easy to difficult or from easy to medium depending on how the user reacted to the game (Cai et al., 2018). The users' expressions thus served either to ease the game or to increase the challenge level, which suggests that the online experiment was successful. For instance, in 3a the cannon chunk levels increased from low to high as the players' neutral expression level rose sharply, and the same pattern appears in 3f. Then, as the players' emotional state went from angry to normal, the game levels dropped, for example from hard to easy (Zhang et al., 2018).

We also noted that higher anger during the game lowered the game level to balance the player's emotions (Zhang et al., 2018), and that when participants stopped being angry, the levels rebalanced. However, Figure 3a shows that the online personalisation method became stable in the presence of classification noise only after approximately 1,000 classified frames. Such noise arises when a player suddenly shows mixed reactions, which the game interprets as the player talking or moving too much; some players react with their bodies, shouting or screaming. Moreover, as expected, the challenge levels were all interlinked, as shown in Figure 3a.
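One common way to damp the classification noise described above is to act only on a label that dominates a sliding window of recent frames. The sketch below is a hypothetical example of that idea, not the method used in the pilot study; the window length and the 60% majority share are illustrative values.

```python
from collections import Counter, deque
from typing import Deque, Optional


class SmoothedEmotion:
    """Majority vote over the last `window` per-frame emotion labels."""

    def __init__(self, window: int = 30, min_share: float = 0.6) -> None:
        self.frames: Deque[str] = deque(maxlen=window)
        self.min_share = min_share

    def update(self, label: str) -> Optional[str]:
        """Add one frame's label; return the dominant label, or None while undecided."""
        self.frames.append(label)
        if len(self.frames) < self.frames.maxlen:
            return None  # not enough evidence yet
        top, count = Counter(self.frames).most_common(1)[0]
        return top if count / len(self.frames) >= self.min_share else None
```

A longer window makes the balancing less jumpy when a player shouts or moves, at the cost of reacting more slowly to genuine changes in emotion.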

Pairwise Tests

In this online experiment, we aimed to understand how participants felt when playing the game with a personalised experience, compared with a baseline static game. Following the procedure of Chen, Konrad, and Ishwar (2018), we elicited pairwise preferences, asking participants to choose between two systems (A or B). Questionnaires were used to collect these preferences, and the pairwise tests of the systems were conducted after the questionnaire had been completed (Hileman & Bodin, 2019). A static system S, whose difficulty level did not change, was compared with a personalised system P. The experiment followed a controlled, randomised design.

The experiment performed three pairwise tests in sequence to avoid bias. In each test, the static system and the personalised system were compared starting from a different challenge level: easy, normal and hard, in that order. The tests were carried out with the participants who responded to our invitation (Grau-Moya et al., 2018). Each of the three game-playing sessions ended after a maximum of 2 minutes so that user fatigue would not affect the experimental results. Table 1 shows the data collected after play. For each initial challenge level, the legend indicates whether the personalised system or the static system was preferred, whether both were preferred equally, or whether neither was preferred.
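The three pairwise tests can be laid out as a per-participant schedule. This sketch assumes that, within each test, the order in which S and P are played is randomised per participant, which is one reading of the controlled, randomised design described above; the function name `pairwise_schedule` and its seeding scheme are hypothetical.

```python
import random
from typing import List, Tuple

# Starting challenge levels of the three pairwise tests, in order.
STARTING_LEVELS = ("easy", "normal", "hard")


def pairwise_schedule(participant_id: int, seed: int = 0) -> List[Tuple[str, str, str]]:
    """Return (starting_level, first_system, second_system) for each test.

    Assumption: within each test the static system "S" and the personalised
    system "P" are played in random order, seeded per participant so the
    schedule can be reproduced.
    """
    rng = random.Random(seed * 10_000 + participant_id)
    schedule = []
    for level in STARTING_LEVELS:
        pair = ["S", "P"]
        rng.shuffle(pair)  # randomise which system is played first
        schedule.append((level, pair[0], pair[1]))
    return schedule
```

Seeding the generator per participant keeps the assignment random across participants while still letting the exact schedule be regenerated during analysis.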

Each session was divided into four segments, with approximately three minutes of play for each player. Participants were asked to play two games each and were then asked about their gaming experience and the levels. In a preference questionnaire they indicated only which system they preferred during the game (S preferred to P, or P preferred to S). The question presented to participants was: "For each of the three levels, in which game mode did you find the challenge most accommodating?" The data were recorded in Table 1, which presents the participants' preferences and the levels they found accommodating. The results revealed that when both systems started at the "easy" challenge level (p = 0.037), most participants preferred the personalised levels to the static ones: 70% of participants preferred the personalised levels and only 30% preferred the static levels. A similar preference emerged when the systems started at the "normal" level (p = 0.037): most participants opted for the personalised levels and passed over the static levels during the experiment.
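Pairwise preference counts of this kind are often analysed with an exact sign (binomial) test, in which "both" and "neither" responses are set aside and the remaining decisive choices are compared against a 50/50 split. The study does not state which test produced its p-values, so this is an assumed technique rather than a reconstruction of the reported analysis; `sign_test_p` is a hypothetical helper using only the Python standard library.

```python
from math import comb


def sign_test_p(prefer_p: int, prefer_s: int) -> float:
    """Exact two-sided sign test for pairwise preferences.

    prefer_p: participants who preferred the personalised system P
    prefer_s: participants who preferred the static system S
    Ties ("both" or "neither") must be excluded before calling.
    """
    n = prefer_p + prefer_s
    if n == 0:
        raise ValueError("no decisive preferences to test")
    k = max(prefer_p, prefer_s)
    # Probability of a split at least this extreme in one direction under
    # H0, where each decisive choice is P or S with probability 0.5.
    one_sided = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    # Double for a two-sided test, capping at 1.
    return min(1.0, 2 * one_sided)
```

With samples as small as ten participants, an exact test of this kind avoids relying on a normal approximation that may be poor at that size.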

Furthermore, when the starting level was raised from normal to hard, the margin was narrow: around 40% of participants chose the personalised system over the static system, while around 30% chose neither (Lai & Lai, 2018). From this small online pairwise test, we conclude that most players preferred the personalised game levels to the static ones, which suggests that personalised levels can improve the gameplay experience. When the starting level was "normal" or "easy", the personalised system attracted a clear majority of participants, whereas at "hard" it was preferred by only a narrow margin. This indicates that participants clearly perceived a difference between the static and personalised levels (Fang et al., 2022).

Data Collection

Participants were recorded with a Canon Legria R606 video camera during the experiment. The recordings were compressed, with four channels at 50p (50 frames per second), using MP3 audio and MPEG-4 video formats. A watch worn on the arm, 7 cm from the wrist, measured heart rate (HR) at the same time. The HR was recorded at 1 percent. In both experiments, participants were asked to complete a questionnaire after finishing their gaming turns.
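At 50 frames per second, frame indices convert directly to time, which helps both in relating the roughly 1000 classified frames reported in the online experiment to seconds of play and in pairing video frames with heart-rate readings. For example, 1000 frames correspond to 20 seconds, and a 2-minute session yields 6000 frames. The sketch assumes one HR reading per second, since the HR sampling rate is not stated clearly; the helper names and `HR_SAMPLE_HZ` are hypothetical.

```python
FPS = 50  # video frame rate (50p), from the recording setup
HR_SAMPLE_HZ = 1.0  # assumption: one heart-rate reading per second


def frame_to_seconds(frame_index: int, fps: int = FPS) -> float:
    """Time in seconds at which a given frame was captured."""
    return frame_index / fps


def frame_to_hr_index(frame_index: int, fps: int = FPS, hr_hz: float = HR_SAMPLE_HZ) -> int:
    """Index of the heart-rate sample covering a given video frame."""
    return int(frame_to_seconds(frame_index, fps) * hr_hz)


def frames_in_session(minutes: float, fps: int = FPS) -> int:
    """Number of frames recorded in a session of the given length."""
    return round(minutes * 60 * fps)
```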

Data Processing

The data from both experiments were tabulated and analysed before the results were produced. The recordings from the Canon Legria R606 video camera were also reviewed to verify the differences shown by the players during the experiment before further analysis. Anything other than facial expression, and any factor unrelated to the research, was excluded in order to obtain clear results.

Chapter Four: Results and Discussion

Results

The research comprised two experiments investigating how facial expressions can be used to improve gamers' experience. In the first experiment, participants were invited to a gaming study in which they played both the 2D and the 3D versions of the game, at least twice each, and their gaming experience was recorded. The results were tabulated as shown in Figure 5: in the 2D game, players reported sensory and imaginative immersion as well as tension, and the same pattern was recorded for the 3D game, as can be seen from items Q1-Q14 in Figure 5. In the second experiment, we invited 10 participants to an online gaming study that compared two systems: a personalised system and a static system. The analysis showed that participants preferred the personalised system over the static one because it balanced the game level while they were playing.

Discussion

Two games were created with a Dynamic Balancing System and an FER system that reads the player's facial expressions during play. In this way, facial expressions can be interpreted and used to adjust the game difficulty. The first group played the 2D game Alien Fighter, while the second group played the 3D game Rushing Escape. One group was given a game with the dynamic balancing system and the other was not. The two samples were compared using a t-test. The players' experience in the baseline and enhanced games did not differ on the Negative Affect variables. The results of the first experiment were similar to those of the second, because in both cases the system was able to interpret the changing patterns of the players' facial expressions. The personalisation system also handled mixed reactions from players and balanced the game according to their expressions.
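Because one group played with the dynamic balancing system and another without it, the comparison can be sketched as an independent two-sample t-test. The text does not state which t-test variant was used, so this sketch assumes Welch's version, which does not require equal group variances; `welch_t` is a hypothetical helper, and the scores it receives would be per-player questionnaire values such as Negative Affect.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(baseline: Sequence[float], enhanced: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and degrees of freedom for two independent groups."""
    n1, n2 = len(baseline), len(enhanced)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    # Sample variances (n - 1 denominator) divided by group size.
    v1 = variance(baseline) / n1
    v2 = variance(enhanced) / n2
    t = (mean(baseline) - mean(enhanced)) / sqrt(v1 + v2)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df
```

Turning t into a p-value needs the t distribution with these degrees of freedom; in practice, `scipy.stats.ttest_ind(baseline, enhanced, equal_var=False)` performs the same Welch test and returns the p-value directly.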

In the second experiment, the pairwise tests revealed that participants tended to avoid the static game levels. About 30% of participants chose neither the personalised nor the static mode when the starting level was changed from normal to hard, which suggests that they avoided the hard level altogether. By contrast, around 70% of participants chose the personalised mode when the starting level was set to easy, and 30% chose the static mode. This may have been because the static level was too hard for them, or because they did not enjoy playing at that level. Players who opted for the personalised levels may have done so because the system adapted to their circumstances. A gaming system that detects players' facial expressions and offers either a personalised or a static experience could therefore work well for these players. The purpose of this project was to find a better way of making all players feel part of the game and so improve their gaming experience. Gamers enjoy moving between levels once they have mastered the game, but until then they tend to start from the lowest levels so that they can adapt. An enhanced facial-expression game would therefore be the best option for most players. This explains why most participants in our experimental setup chose the personalised game: it detected their facial expressions during gameplay and adjusted the level accordingly. For example, if a player was angry, the personalised game detected this and quickly switched to a level the player would find easier. This is why the data show so many participants choosing the personalised game mode.
The static game could only be adjusted manually, which is why no one wanted to continue when it was changed from normal to hard: it seemed too difficult, so they opted out. However, we were unable to measure participants' reactions while they played the static games, because their anger or frustration was expressed not only facially but also through verbal shouting, hand gestures and head movements, and these factors prevented their facial expressions from being recorded and measured accurately. Although this limited the experiment, we still believe that a player's facial expression can be assessed and tracked together with additional features such as head movements and gaze, since both come from the facial tracking area. Verbal shouts could also be treated as a form of facial expression, because a player typically shouts when either happy or angry with the outcome of the game. In general, our experiment recorded facial expressions from the participants, but we could not measure the outcome for the reasons given above.

Chapter Five: Conclusion

In this study, we investigated methods for using facial expressions to improve gamers' experience and their engagement with a particular game. To this end, we conducted experiments that compared a personalised system with a static system. The personalised system had facial recognition capability and could therefore regulate the game according to the user's reactions. However, it could balance the game only on the basis of facial expressions, not other signals such as hand waving or shouting. The static system, in contrast, had to be adjusted manually and could not detect any motion or facial expression from the participants. The first experiment found no statistical difference in the players' expressions, and the results indicate that facial expression is indeed needed to enhance gamers' experience during play.

We therefore conclude that facial expression is the main factor behind a user's affective state when enjoying a game. Our study showed how participants who played the personalised games reacted to different levels of the game and how most of them opted for the personalised game mode. The pilot study therefore indicated a degree of effectiveness, as everything worked as expected. The personalised system balanced game levels according to the reactions it obtained from gamers: it lowered the level when a user showed distress or anger and raised the difficulty when the user appeared neutral or happy. It also proved stable in the presence of considerable noise, such as verbal shouts from participants. Future research can therefore build on this experiment to develop better games that use facial expression to improve players' experience. The pairwise tests with ten participants supported our analysis: around 70% of participants preferred the personalised gaming system over the static one. However, when participants expressed anger by shouting or with their hands, the system did not recognise this as a facial expression, so the game level did not change. Facial recognition detects only activity that occurs on the gamer's face; motions made with the hands are hard for the system to notice and are not detected accurately.

Further studies are needed because many questions remain unanswered. For example, when a participant shouts, the game may be able to detect the player's facial activity and should be able to classify it as happy or sad in order to balance the game. Future research should therefore examine why the personalised system depends only on facial expression, and how to make the personalisation system accurate enough to read all the emotions expressed by a player. Dynamic balancing variables could also be explored in future work on 2D and 3D game models, for example through a further series of game tests that use a dynamic balancing system to analyse participants' reactions during 2D and 3D gameplay.

References

Bond, M., Buntins, K., Bedenlier, S., Zawacki-Richter, O., & Kerres, M. (2020). Mapping research in student engagement and educational technology in higher education: A systematic evidence map. International Journal of Educational Technology in Higher Education, 17(1), 1-30.

Buil, I., Catalán, S., & Martínez, E. (2019). Encouraging intrinsic motivation in management training: The use of business simulation games. The International Journal of Management Education, 17(2), 162-171.

Carr, B. M., O’Neil, A., Lohse, C., Heller, S., & Colletti, J. E. (2018). Bridging the gap to effective feedback in residency training: Perceptions of trainees and teachers. BMC Medical Education, 18(1), 1-6.

Chen, J., Konrad, J., & Ishwar, P. (2018). VGAN-based image representation learning for privacy-preserving facial expression recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (pp. 1570-1579).

Dawson, P., Henderson, M., Mahoney, P., Phillips, M., Ryan, T., Boud, D., & Molloy, E. (2019). What makes for effective feedback: Staff and student perspectives. Assessment & Evaluation in Higher Education, 44(1), 25-36.

De Smale, S., Kors, M. J., & Sandovar, A. M. (2019). The case of This War of Mine: A production studies perspective on moral game design. Games and Culture, 14(4), 387-409.

Fitzgerald, M., & Ratcliffe, G. (2020). Serious games, gamification, and serious mental illness: a scoping review. Psychiatric Services, 71(2), 170-183.

Gennari, R., Melonio, A., Raccanello, D., Brondino, M., Dodero, G., Pasini, M., & Torello, S. (2017). Children's emotions and quality of products in participatory game design. International Journal of Human-Computer Studies, 101, 45-61.

Grau-Moya, J., Leibfried, F., & Bou-Ammar, H. (2018). Balancing two-player stochastic games with soft Q-learning. arXiv preprint arXiv:1802.03216.

Henderson, M., Phillips, M., Ryan, T., Boud, D., Dawson, P., Molloy, E., & Mahoney, P. (2019). Conditions that enable effective feedback. Higher Education Research & Development, 38(7), 1401-1416.

Hey-Cunningham, A. J., Ward, M. H., & Miller, E. J. (2021). Making the most of feedback for academic writing development in postgraduate research: Pilot of a combined programme for students and supervisors. Innovations in Education and Teaching International, 58(2), 182-194.

Kokkinidis, A., Berghäll, A., Österlund, E., & Paulsen, H. (2021). Conceal, don't feel: Representing emotional suppression through deep game design.

Lämsä, J., Hämäläinen, R., Aro, M., Koskimaa, R., & Äyrämö, S. M. (2018). Games for enhancing basic reading and maths skills: A systematic review of educational game design in supporting learning by people with learning disabilities. British Journal of Educational Technology, 49(4), 596-607.

McCutcheon, S., & Duchemin, A. M. (2020). Overcoming barriers to effective feedback: a solution-focused faculty development approach. International Journal of Medical Education, 11, 230.

Muñoz, J. E., & Dautenhahn, K. (2021). Robo Ludens: A game design taxonomy for multiplayer games using socially interactive robots. ACM Transactions on Human-Robot Interaction (THRI), 10(4), 1-28.

Nassaji, H., & Kartchava, E. (Eds.). (2017). Corrective feedback in second language teaching and learning: Research, theory, applications, implications (Vol. 66). Taylor & Francis.

Ninaus, M., Greipl, S., Kiili, K., Lindstedt, A., Huber, S., Klein, E., ... & Moeller, K. (2019). Increased emotional engagement in game-based learning–A machine learning approach on facial emotion detection data. Computers & Education, 142, 103641.

Pozo, T. (2018). Queer games after empathy: Feminism and haptic game design aesthetics from consent to cuteness to the radically soft. Game Studies, 18(3).

Schrader, C., Brich, J., Frommel, J., Riemer, V., & Rogers, K. (2017). Rising to the challenge: An emotion-driven approach toward adaptive serious games. In Serious Games and Edutainment Applications (pp. 3-28).

Walk, W., Görlich, D., & Barrett, M. (2017). Design, dynamics, experience (DDE): an advancement of the MDA framework for game design. In Game Dynamics (pp. 27-45). Springer, Cham.

Winstone, N., & Carless, D. (2019). Designing effective feedback processes in higher education: A learning-focused approach. Routledge.

Yang, Q. F., Chang, S. C., Hwang, G. J., & Zou, D. (2020). Balancing cognitive complexity and gaming level: Effects of a cognitive complexity-based competition game on EFL students' English vocabulary learning performance, anxiety and behaviors. Computers & Education, 148, 103808.

Yu, Z., Gao, M., & Wang, L. (2021). The effect of educational games on learning outcomes, student motivation, engagement and satisfaction. Journal of Educational Computing Research, 59(3), 522-546.

Sharma, K., Papavlasopoulou, S., & Giannakos, M. (2019). Coding games and robots to enhance computational thinking: How collaboration and engagement moderate children’s attitudes? International Journal of Child-Computer Interaction, 21, 65-76.

Khazaal, Y., Favrod, J., Sort, A., Borgeat, F., & Bouchard, S. (2018). Computers and games for mental health and well-being. Frontiers in Psychiatry, 9, 141.

Manca, M., Paternò, F., Santoro, C., Zedda, E., Braschi, C., Franco, R., & Sale, A. (2021). The impact of serious games with humanoid robots on mild cognitive impairment older adults. International Journal of Human-Computer Studies, 145, 102509.

Huynh, S., Kim, S., Ko, J., Balan, R. K., & Lee, Y. (2018). EngageMon: Multi-modal engagement sensing for mobile games. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2(1), 1-27.

Akbar, M. T., Ilmi, M. N., Rumayar, I. V., Moniaga, J., Chen, T. K., & Chowanda, A. (2019). Enhancing game experience with facial expression recognition as dynamic balancing. Procedia Computer Science, 157, 388-395.

Gamage, V., & Ennis, C. (2018, November). Examining the effects of a virtual character on learning and engagement in serious games. In Proceedings of the 11th Annual International Conference on Motion, Interaction, and Games (pp. 1-9).

Hileman, J., & Bodin, Ö. (2019). Balancing costs and benefits of collaboration in an ecology of games. Policy Studies Journal, 47(1), 138-158.

Cai, J., Meng, Z., Khan, A. S., Li, Z., O'Reilly, J., & Tong, Y. (2018, May). Island loss for learning discriminative features in facial expression recognition. In 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018) (pp. 302-309). IEEE.

Zhang, F., Zhang, T., Mao, Q., & Xu, C. (2018). Joint pose and expression modeling for facial expression recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 3359-3368).

Lai, Y. H., & Lai, S. H. (2018, May). Emotion-preserving representation learning via generative adversarial network for multi-view facial expression recognition. In 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018) (pp. 263-270). IEEE.

Fang, Z., Liu, Z., Liu, T., Hung, C. C., Xiao, J., & Feng, G. (2022). Facial expression GAN for voice-driven face generation. The Visual Computer, 38(3), 1151-1164.